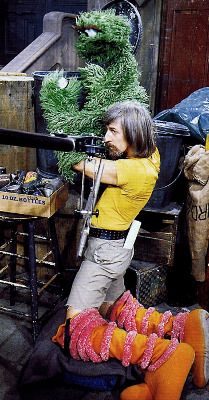

Pay no attention to the man behind the curtain

The mythology of technology is powerful, but also kind of superficial. It usually doesn’t take much to move past the marketing and vapourware to understand whether a tool is useful or not.

With AI this has been a bit more challenging, as the opacity of the tech often makes it difficult for us to scrutinize or understand what is taking place behind the scenes. Similarly, the language and hype around AI deliberately hide or downplay the role of humans in making these automated systems work.

Yet over the last few years, the AI hype machine has started to falter, and the mystique surrounding AI has waned. That doesn’t mean the technology will go away, far from it. However, our understanding of it, and more importantly our relationship with it, is starting to evolve substantially.

Perhaps this begins with a sober acknowledgement of the shortcomings of AI that undermine many of the promises and potential associated with it.

Researchers studied how the labels applied to 19 key #AI/#ML image datasets affect an algorithm trained on them. Labels on images used to train #machineVision systems are often wrong - could mean bad decisions by self-driving cars, medical systems, etc. https://t.co/010CyHawTZ

— Cherri Pancake (@pancakeACM) April 8, 2021

THE CURRENT BOOM in artificial intelligence can be traced back to 2012 and a breakthrough during a competition built around ImageNet, a set of 14 million labeled images.

In the competition, a method called deep learning, which involves feeding examples to a giant simulated neural network, proved dramatically better at identifying objects in images than other approaches. That kick-started interest in using AI to solve different problems.

But research revealed this week shows that ImageNet and nine other key AI data sets contain many errors. Researchers at MIT compared how an AI algorithm trained on the data interprets an image with the label that was applied to it. If, for instance, an algorithm decides that an image is 70 percent likely to be a cat but the label says “spoon,” then it’s likely that the image is wrongly labeled and actually shows a cat. To check, where the algorithm and the label disagreed, researchers showed the image to more people.

ImageNet and other big data sets are key to how AI systems, including those used in self-driving cars, medical imaging devices, and credit-scoring systems, are built and tested. But they can also be a weak link. The data is typically collected and labeled by low-paid workers, and research is piling up about the problems this method introduces.
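
The label check described in the excerpt, flagging an image when a trained model confidently disagrees with its label, can be sketched roughly as follows. This is a simplified illustration and not the researchers' actual method: the function name `flag_suspect_labels`, the single fixed `threshold`, and the sample data are all assumptions made for the example.

```python
from typing import Dict, List, Tuple

# Each example is (id, human-given label, model's predicted probabilities).
# The data layout and the 0.7 cut-off are hypothetical, chosen to mirror
# the "70 percent likely to be a cat" case in the excerpt.
Example = Tuple[str, str, Dict[str, float]]


def flag_suspect_labels(examples: List[Example], threshold: float = 0.7) -> List[str]:
    """Return ids of examples where the model confidently disagrees with the label."""
    suspects = []
    for example_id, given_label, probs in examples:
        # The class the model considers most likely for this image.
        predicted_label = max(probs, key=probs.get)
        # Flag only when the model disagrees AND is confident enough.
        if predicted_label != given_label and probs[predicted_label] >= threshold:
            suspects.append(example_id)
    return suspects


examples = [
    ("img-001", "spoon", {"cat": 0.70, "spoon": 0.10, "dog": 0.20}),  # confident disagreement
    ("img-002", "cat", {"cat": 0.90, "spoon": 0.05, "dog": 0.05}),    # model agrees with label
    ("img-003", "dog", {"cat": 0.40, "spoon": 0.15, "dog": 0.45}),    # model agrees with label
    ("img-004", "dog", {"cat": 0.55, "spoon": 0.05, "dog": 0.40}),    # disagrees, but not confidently
]

print(flag_suspect_labels(examples))
```

Note that a check like this only produces candidates. As the excerpt says, the flagged images still have to be shown to more people to decide which label is right.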

The last sentence of that excerpt has been a kind of open dirty secret in the “machine learning” world for some time: the magic of AI is based on really monotonous and soul-crushing work done by humans, humans who are paid pennies or even fractions of pennies. And that doesn’t include all the humans working for free by completing “captcha” or other forms that claim to be testing whether you’re a human, but are in fact training a machine through your interactions as you identify a bus or a traffic light in a set of images.

In this instance, it’s not just that humans make mistakes, but that these humans are mistreated, and so the mistakes, whether deliberate or not, reflect the working conditions in which the infrastructure of AI was built.

Similarly, this business practice has been so universal, and is itself built on a system that optimizes human exploitation, that the entire field of AI is to a certain extent compromised by the data science principle that bad data in results in bad data out.

Now that we understand that the data infrastructure enabling AI is flawed, what will be the response? Rebuild the infrastructure the way a shoddy or defective bridge would be rebuilt? Perhaps for the wealthy and deeply resourced, assuming that they can figure out the extent to which their data is corrupted.

Another, more likely scenario is a doubling down on the dependence on human labour, and with it the open acknowledgement that AI is not autonomous, but rather just a glorified puppet.

Robots are doing more blue-collar work, but behind the scenes an army of human workers help when the AI fails. “They are the human glue that allows that system to function at 99.96 percent reliability,” says @mattbeane, an assistant professor at UCSB. https://t.co/5nkCHVsJBE

— Will Knight (@willknight) April 7, 2021

DAVID TEJEDA HELPS deliver food and drinks to tables at a small restaurant in Dallas. And another in Sonoma County, California. Sometimes he lends a hand at a restaurant in Los Angeles too.

Tejeda does all this from his home in Belmont, California, by tracking the movements and vital signs of robots that roam around each establishment, bringing dishes from kitchen to table, and carrying back dirty dishes.

Sometimes he needs to help a lost robot reorient itself. “Sometimes it’s human error, someone moving the robot or something,” Tejeda says. “If I look through the camera and I say, ‘Oh, I see a wall that has a painting or certain landmarks,’ then I can localize it to face that landmark.”

Tejeda is part of a small but growing shadow workforce. Robots are taking on more kinds of blue-collar work, from driving forklifts and carrying freshly picked grapes to stocking shelves and waiting tables. Behind many of these robot systems are humans who help the machines perform difficult tasks or take over when they get confused. These people work from bedrooms, couches, and kitchen tables, a remote labor force that reaches into the physical world.

The need for humans to help the robots highlights the limits of artificial intelligence, and it suggests that people may still serve as a crucial cog in future automation.

The use of the word “cog” here is a bit disturbing.

First we had the myth that AI would result in massive unemployment. What was so sinister about this myth was not just the fear it evoked, but the attention it drew away from the human labour that was already being consumed to build AI.

Now we have the myth that AI is autonomous, and thus we may miss the humans who will become “cogs” in the system in order to make it possible.

The alternative, of course, would be to foster a hybrid vision in which humans and machines work in harmony. Is that too radical? Perhaps for the present, but it does seem as if humans and machines will be working more closely moving forward.

Another sign that remote robot wrangling is taking off is interest from some startups focused on the problem. Jeff Linnell, who previously worked on robotics at Google, left to found Formant in 2017, when he realized that more remote operation would be needed. “There are all sorts of applications where a robot can do 95 percent of the mission and a person can pick up that slack,” he says. “That's our thesis.”

Formant’s software combines tools to manage fleets of robots with others to set up teams of remote robot operators. “The only way you get to an economy of scale over the next decade, in my opinion, is to have a human behind it, managing a fleet,” he says.

Certainly this vision of humans managing fleets of robots evokes our original argument that the impact of automation will be to increase capacity rather than reduce employment or work.

It does, however, raise ethical questions about whether the human is in a position to be responsible for said fleet or is just engaging with it. At what point does responsibility (and liability) become difficult and therefore undesirable?

The metaphors at play here are significant and not just symbolic. If we continue to treat our machines as slaves, then how are we treating the humans behind those machines, who are currently treated only marginally better than slaves?

Alternatively, if we learn to treat our machines with respect, and use them responsibly, it can help us model and mimic our ideal relationship with our environment, which historically we have not treated with respect or responsibility.

These issues may seem trivial; however, they reflect our broader relationships with each other and our world. In that sense, AI provides us with an opportunity to reassess our relationships and our society as a whole.

Nowadays there are so many self called tech gurus showing merely puppets but calling it "robots", advanced AI or, as in this case the so terrifying "skynet".

— Matias Iacono (@MatiasIacono) April 7, 2021