A thought experiment in how edge computing could work

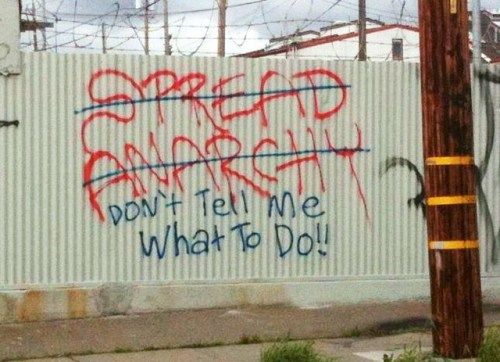

Technology is inherently political, because the range of configurations it allows (or lacks) inevitably embodies an ideology and a desired worldview. No technology is neutral and no data is neutral; embedded in their operating principles are the assumptions and expectations of a society.

As a result, we should not dismiss any technology superficially, but instead ask how that technology can be configured or adapted to meet our values for the kind of society we desire.

Part of what makes AI tyrannical by default is the false assumption that it has to be centralized, unresponsive, unaccountable, and inevitable. Yet none of this has to be true, and there’s no reason AI can’t be democratized.

Imagine for a moment that we live in such a world, one where AI has been democratized and, rather than being feared, is embraced and used effectively to empower everyone. What would that look like?

The first step would be distributed data. Imagine that, as an individual or an organization, all the data you generate, or that is generated about you, is stored in a digital container that you control. This container could be located in your home or in the cloud; it doesn’t matter, so long as you hold the keys to this otherwise encrypted data.

Your data is governed by a set of policies that you create (based on templates or your own imagination) and can modify as you see fit. These policies specify when and where you’re willing to share your data, and on what terms. They also inform your own data collection practices, as your container is always in contact with other containers, seeking information that you want or value.
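To make the idea of user-defined sharing policies a little more concrete, here is a minimal sketch of how a container might check an incoming request against its owner's rules. Everything in it is hypothetical: the names `Policy`, `SharingRequest`, `DataContainer`, and `may_share`, the data categories, and the deny-by-default rule are illustrative assumptions, not a format the post or any existing system defines.

```python
from dataclasses import dataclass, field


@dataclass(frozen=True)
class Policy:
    """Hypothetical user-defined rule: which data category may go to whom, and why."""
    category: str                       # e.g. "location" (illustrative category)
    allowed_requesters: frozenset[str]  # who may receive this category
    allowed_purposes: frozenset[str]    # what they may use it for


@dataclass(frozen=True)
class SharingRequest:
    """Hypothetical request arriving from another container."""
    requester: str
    category: str
    purpose: str


@dataclass
class DataContainer:
    owner: str
    policies: list[Policy] = field(default_factory=list)  # the owner can edit this at any time

    def may_share(self, req: SharingRequest) -> bool:
        # Deny by default (an assumption): share only if some policy explicitly allows the request.
        return any(
            p.category == req.category
            and req.requester in p.allowed_requesters
            and req.purpose in p.allowed_purposes
            for p in self.policies
        )


# Usage: Alice shares her location with her sister for travel planning, and nothing else.
container = DataContainer(owner="alice", policies=[
    Policy("location", frozenset({"sister"}), frozenset({"travel-planning"})),
])
print(container.may_share(SharingRequest("sister", "location", "travel-planning")))  # True
print(container.may_share(SharingRequest("advertiser", "location", "marketing")))    # False
```

Because the policies are plain data owned by the container, changing your terms is just a matter of adding or removing entries in `policies`.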

The reason this data exists is to train and inform your personal (or organizational) AI. Everyone has their own AI, which runs on dedicated processors they’ve obtained for that purpose. Over time the range and features of these chips diversify; however, the general purpose remains to enable each user to build and control their own AI.

This is the primary reason people control their own data repository (a mix of their own data and data they’ve gathered from others): so they can control their own AI. They can train it to know about them, or about any subject they desire, provided the training data is available.

Anytime an AI makes a decision, it generates data that details and explains how that decision was made. This transparency not only lets users see why their AI made a decision, but also provides comparative context when that decision is juxtaposed with the decisions other AIs made on the same subject. Don’t trust your own AI? No problem: see how it stacks up against the AI used by your family. Is your sister’s AI producing different results? Why not check what data she used to train it? Maybe there was a perspective or data set you didn’t anticipate.
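One way to picture this kind of comparison is a decision record that each AI emits alongside its answer, listing its reasoning and the data sources it was trained on. The sketch below is purely illustrative: `DecisionRecord`, `compare`, and the example sources are hypothetical names, and it assumes each AI can report its training sources as a simple set of labels.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DecisionRecord:
    """Hypothetical record an AI emits every time it decides something."""
    owner: str
    question: str
    decision: str
    explanation: str
    training_sources: frozenset[str]  # assumed: labels for the data sets used in training


def compare(mine: DecisionRecord, theirs: DecisionRecord) -> dict:
    """Juxtapose two decisions on the same question and show which training data differs."""
    if mine.question != theirs.question:
        raise ValueError("records concern different questions")
    return {
        "agree": mine.decision == theirs.decision,
        "only_mine": sorted(mine.training_sources - theirs.training_sources),
        "only_theirs": sorted(theirs.training_sources - mine.training_sources),
    }


me = DecisionRecord("me", "buy-house", "wait", "prices rising faster than income",
                    frozenset({"local-listings", "household-budget"}))
sister = DecisionRecord("sister", "buy-house", "buy", "interest rates expected to rise",
                        frozenset({"local-listings", "household-budget", "central-bank-forecasts"}))

print(compare(me, sister))
# {'agree': False, 'only_mine': [], 'only_theirs': ['central-bank-forecasts']}
```

In this made-up example the disagreement traces back to a single data set the sister's AI was trained on and yours was not, which is exactly the kind of overlooked perspective the comparison is meant to surface.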

Imagine this scaled up across society, with each person having access to their own customized decision-making engine. Not only do humans retain the right to dissent from their own automated decision-making, they can also dissent from other people’s automated decision-making by sticking with their own.

Aggregate decision-making becomes possible as all of these AIs combine to debate and work through the wide range of possible positions.
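The simplest conceivable form of aggregation is a tally: every AI states its position on a shared question, and the result reports both the most common choice and the full distribution. This is a deliberate simplification (plain plurality voting standing in for the richer "debate" imagined above), and `aggregate` and the example votes are hypothetical.

```python
from collections import Counter


def aggregate(decisions: dict[str, str]) -> tuple[str, Counter]:
    """Tally each AI's decision on one question; return the plurality choice and the full tally.

    Returning the whole tally keeps minority positions visible instead of discarding them.
    """
    if not decisions:
        raise ValueError("no decisions to aggregate")
    tally = Counter(decisions.values())
    # Counter.most_common orders equal counts by first appearance, so ties go to the earliest position.
    winner, _ = tally.most_common(1)[0]
    return winner, tally


votes = {"alice": "build-park", "bob": "build-parking", "carol": "build-park", "dan": "build-park"}
winner, tally = aggregate(votes)
print(winner)       # build-park
print(dict(tally))  # {'build-park': 3, 'build-parking': 1}
```

Keeping the full tally matters for the argument above: anyone whose own AI landed in the minority can see that, and choose to stick with their own result.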

Of course, like any other system, this one could be gamed, but it offers a vision of automation that stays far closer to the individual and retains their rights and autonomy, while still empowering them with data and analytics.

This vision of the future is partially based on the emerging concept of edge computing:

The new frontier in Edge development from smaller form factor to modular data centres and the software to manage it all. Enabling #ai to run at the #edge and paving the way for the emerging #iotedge and #smartcities use cases https://t.co/09W0G4pBGn via @ZDNet & @ldignan

— Mahmoud Hamouda (@Mahmoud_Hamouda) February 18, 2020

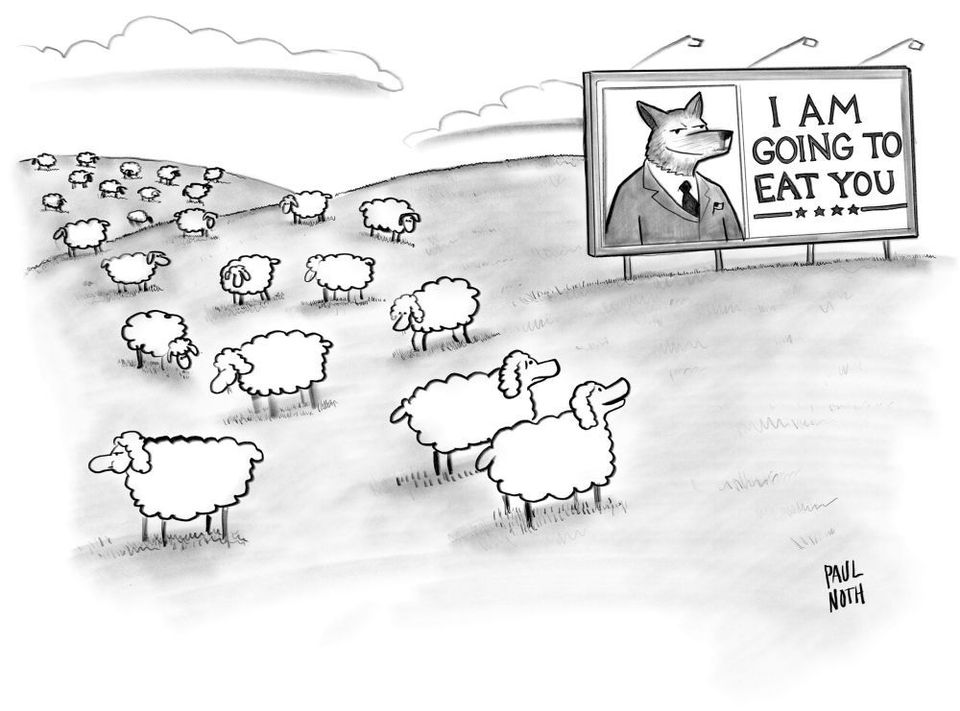

However, most of the language and rhetoric about edge computing focuses on smart cities and industrial infrastructure rather than on individual empowerment. The danger in focusing on the system level rather than the user level is that you end up with some really bad user experiences, like this hilarious Twitter thread:

today in sharing economy struggles: our app powered car rental lost cell service on the side of a mountain in rural California and now I live here I guess pic.twitter.com/XoqqMpEwdN

— Kari Paul (@kari_paul) February 17, 2020

six hours, two tow trucks, and 20 calls to customer service later apparently it was a software issue and the car needed to be rebooted before we could use it @internetofshit pic.twitter.com/LZBZQwRJk8

— Kari Paul (@kari_paul) February 17, 2020

also we were able to turn the car back on somehow but now we are afraid to turn it off because it may not start again and Gig told us we used our “allotted restarts” of the car so we are on a literal endless road trip through California now

— Kari Paul (@kari_paul) February 17, 2020

As someone later in the thread put it (I’m paraphrasing): you can check out of the California Ideology any time you like, but you can never leave.